This research is driven by our curiosity about how far current natural language processing technology can be pushed: if humans can read jumbled sentences, can machines do the same? To answer this question, we evaluate the ability of state-of-the-art models to recognize and comprehend word- and character-level...
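The Python sketch below makes the two kinds of perturbation concrete. It defines two hypothetical jumbling schemes. The character-level one shuffles each word's interior letters and keeps the first and last letters in place. The word-level one shuffles the word order. The function names, the seeding, and the exact perturbations are assumptions for illustration, not the protocol used in this project.

```python
import random


def jumble_word(word, rng):
    """Shuffle a word's interior letters, keeping its first and last letters fixed."""
    if len(word) <= 3:
        return word  # at most one interior letter, so there is nothing to shuffle
    middle = list(word[1:-1])
    rng.shuffle(middle)
    return word[0] + "".join(middle) + word[-1]


def jumble_characters(sentence, seed=0):
    """Hypothetical character-level perturbation: jumble the inside of every word."""
    rng = random.Random(seed)  # seeded so a perturbed test set is reproducible
    return " ".join(jumble_word(w, rng) for w in sentence.split())


def jumble_words(sentence, seed=0):
    """Hypothetical word-level perturbation: shuffle the order of the words."""
    rng = random.Random(seed)
    words = sentence.split()
    rng.shuffle(words)
    return " ".join(words)


if __name__ == "__main__":
    text = "humans can read jumbled sentences surprisingly well"
    print(jumble_characters(text, seed=1))
    print(jumble_words(text, seed=1))
```

Splitting on whitespace keeps the sketch short. One side effect is that punctuation attached to a word is treated as that word's last letter.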

Decision-Making with Bayesian Experts

Can we surpass the best expert?

This research project studies a novel online decision-making problem: in each round, choose the correct action given access to experts who are knowledgeable, rational, and truthful rather than arbitrary. In this setting, we ask whether voting-based algorithms can surpass the best expert when the experts provide additional information...
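A toy model shows how pooling truthful experts can beat the best single expert. The Python sketch below rests on several hypothetical assumptions:

- the state is binary with a uniform prior;
- expert signals are conditionally independent, with made-up accuracies of 0.8, 0.7, and 0.7;
- each rational expert recommends the action with the higher posterior probability.

The sketch compares following the best expert with a plain majority vote. It computes both accuracies exactly by enumerating every outcome. Majority voting is a generic stand-in here, not the algorithm studied in this project.

```python
from itertools import product


def posterior_state_is_1(signal, accuracy, prior):
    """P(state = 1 | signal) for an expert whose signal matches the state with prob `accuracy`."""
    like1 = accuracy if signal == 1 else 1 - accuracy  # P(signal | state = 1)
    like0 = 1 - accuracy if signal == 1 else accuracy  # P(signal | state = 0)
    return like1 * prior / (like1 * prior + like0 * (1 - prior))


def recommend(posterior):
    """A rational, truthful expert recommends the more probable state."""
    return 1 if posterior > 0.5 else 0


def majority_vote(recommendations):
    return 1 if 2 * sum(recommendations) > len(recommendations) else 0


def exact_accuracy(accuracies, prior, rule):
    """Probability that `rule` picks the true state, summed over every state/signal outcome."""
    total = 0.0
    for state in (0, 1):
        p_state = prior if state == 1 else 1 - prior
        for correct in product((True, False), repeat=len(accuracies)):
            prob = p_state
            recs = []
            for q, ok in zip(accuracies, correct):
                prob *= q if ok else 1 - q
                signal = state if ok else 1 - state
                recs.append(recommend(posterior_state_is_1(signal, q, prior)))
            if rule(recs) == state:
                total += prob
    return total


accuracies = [0.8, 0.7, 0.7]  # hypothetical expert accuracies
best = max(range(len(accuracies)), key=lambda i: accuracies[i])
best_expert_acc = exact_accuracy(accuracies, 0.5, lambda recs: recs[best])
vote_acc = exact_accuracy(accuracies, 0.5, majority_vote)
print(f"best expert: {best_expert_acc:.3f}, majority vote: {vote_acc:.3f}")
# prints: best expert: 0.800, majority vote: 0.826
```

Voting does not always win. With accuracies of 0.9, 0.6, and 0.6, the same vote is correct with probability 0.792, below the best expert's 0.9. Whether a plain vote surpasses the best expert therefore depends on the experts' accuracies.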

Imitation Refinement

A Bridge Connecting the Real and Idealized Worlds

We propose imitation refinement, a novel approach that improves the quality of imperfect patterns by mimicking the characteristics of ideal data. We demonstrate its effectiveness on two real-world applications: in the first, we show that imitation refinement improves the quality of poorly written digits by imitating...

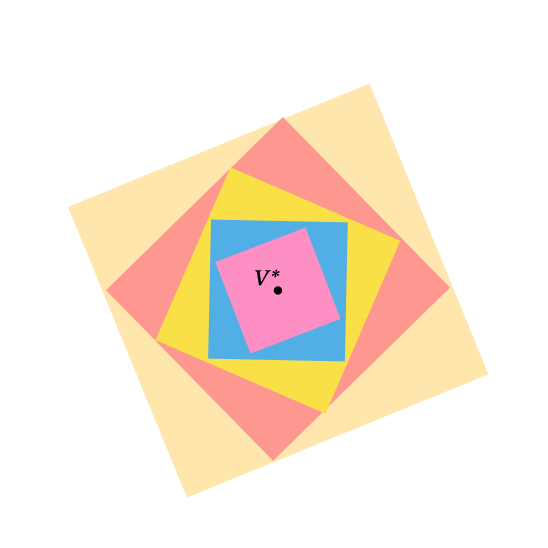

How Does Value-Based Reinforcement Learning Find the Optimal Policy?

A General Explanation from a Topological Point of View

DeepMind researchers claimed state-of-the-art results in the Arcade Learning Environment in their recent ICML paper “A Distributional Perspective on Reinforcement Learning”. They study the value function from a distributional perspective in the reinforcement learning context and design an algorithm that applies Bellman’s equation to approximately learn value distributions, which results...
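The distributional Bellman update mentioned above can be sketched in Python. The return distribution is stored as probabilities over a fixed grid of atoms. The target is built in three steps:

1. Pick the greedy next action by expected value.
2. Shift and scale its atoms by `reward + gamma * z`.
3. Project the result back onto the grid by splitting each atom's mass between its two nearest neighbours.

This is written from the general description of the categorical ("C51") algorithm in that paper, not from its code. It also does not cover the topological analysis discussed in this post. The function names are hypothetical. The support of 51 atoms over [-10, 10] follows the setting the paper used for Atari.

```python
import math


def make_support(v_min, v_max, n_atoms):
    """Evenly spaced return values (atoms) z_0 .. z_{n-1}."""
    dz = (v_max - v_min) / (n_atoms - 1)
    return [v_min + i * dz for i in range(n_atoms)]


def expected_value(support, probs):
    """Q-value implied by a return distribution: sum_i z_i * p_i."""
    return sum(z * p for z, p in zip(support, probs))


def categorical_projection(support, probs, reward, gamma):
    """Project the distribution of reward + gamma * Z back onto the fixed support."""
    n = len(support)
    v_min, v_max = support[0], support[-1]
    dz = (v_max - v_min) / (n - 1)
    projected = [0.0] * n
    for z, p in zip(support, probs):
        tz = min(max(reward + gamma * z, v_min), v_max)  # clip to the support's range
        b = min(max((tz - v_min) / dz, 0.0), n - 1)  # fractional atom index, guarded
        lower, upper = math.floor(b), math.ceil(b)
        if lower == upper:
            projected[lower] += p  # lands exactly on an atom
        else:
            # split the mass between the two neighbouring atoms, closer atom gets more
            projected[lower] += p * (upper - b)
            projected[upper] += p * (b - lower)
    return projected


def distributional_target(support, next_dists, reward, gamma):
    """Greedy next action by expected value, then project its shifted distribution."""
    best = max(next_dists, key=lambda probs: expected_value(support, probs))
    return categorical_projection(support, best, reward, gamma)


if __name__ == "__main__":
    support = make_support(-10.0, 10.0, 51)
    uniform = [1.0 / 51] * 51
    peaked = [0.0] * 51
    peaked[30] = 1.0  # all mass on the atom at return 2.0
    target = distributional_target(support, [uniform, peaked], reward=1.0, gamma=0.9)
    print(round(expected_value(support, target), 6))
```

Splitting mass linearly between neighbouring atoms keeps the target a valid distribution. When no clipping occurs, it also preserves the target's mean, so the greedy Q-value of the projected target equals `reward + gamma * Q(next)`.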

Pedagogical Value-Aligned Crowdsourcing

Inspiring the Wisdom of Crowds via Interactive Teaching

Crowdsourcing has become an economical means of leveraging human wisdom for large-scale data annotation. However, when annotation tasks require specific domain knowledge that most people lack, as is common in citizen science projects, problems with crowd workers’ integrity and proficiency can significantly impair the quality of crowdsourced data. In this...
